New: WildDash 2 with 4256 public frames, new labels & panoptic GT!

See also: RailSem19 dataset for rail scene understanding.

Welcome to the WildDash 2 Benchmark. This website provides a dataset and benchmark for panoptic, semantic, and instance segmentation. We aim to improve the expressiveness of performance evaluation for computer vision algorithms in regard to their robustness for driving scenarios under real-world conditions. In addition to the WildDash dataset, wilddash.cc also hosts the railway and tram dataset RailSem19, a large dataset for training semantic scene understanding of railway scenes: RailSem19.

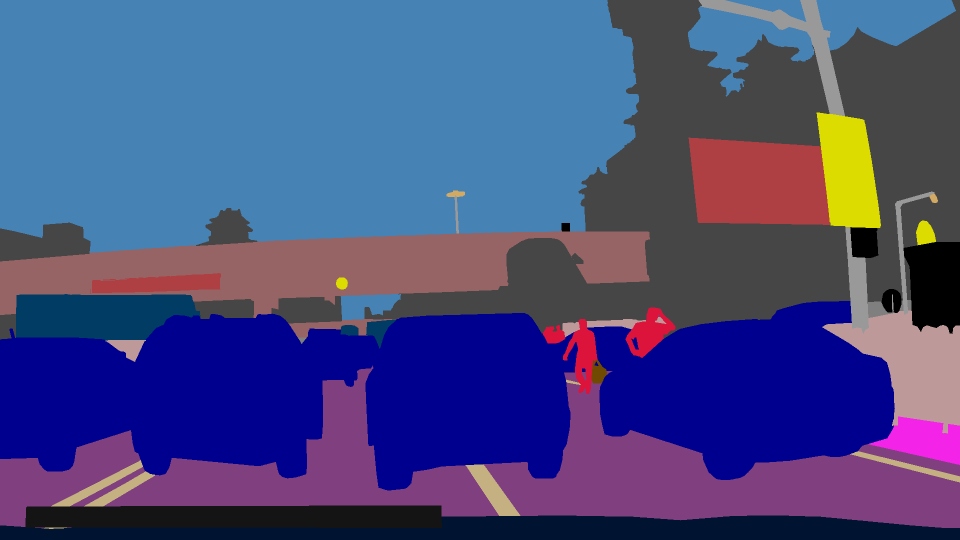

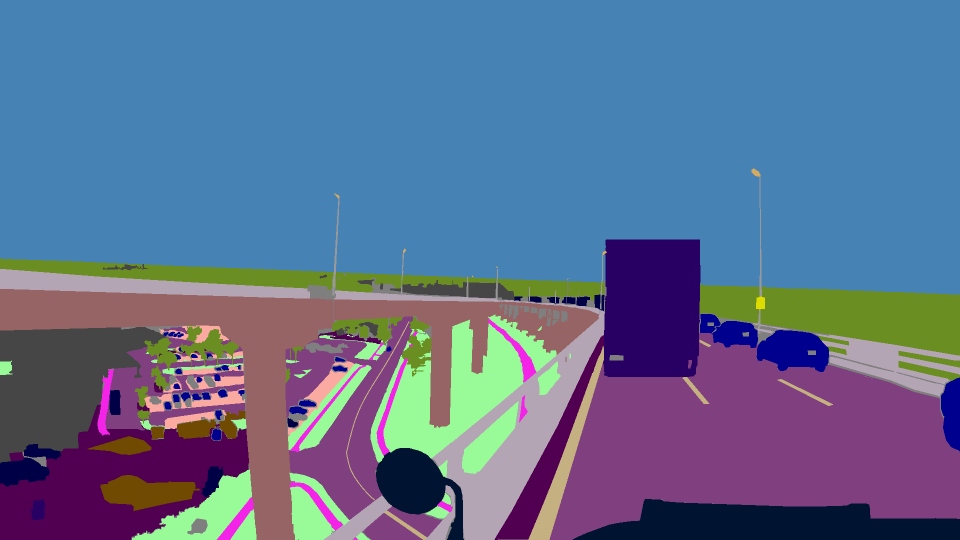

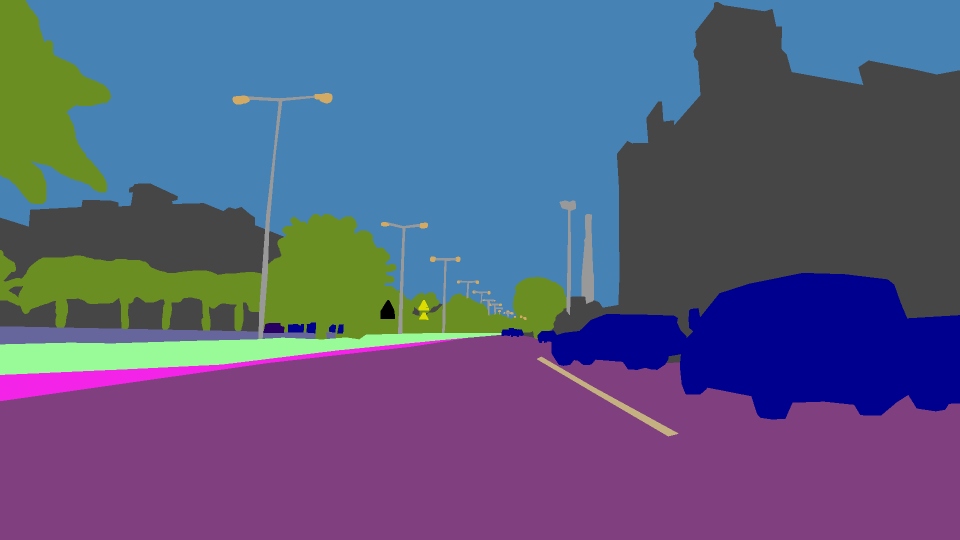

We include images from a variety of data sources from all over the world with many different difficult scenarios (e.g. rain, road coverage, darkness, overexposure) and camera characteristics (noise, compression artifacts, distortion). The supplied ground truth format is compatible with Cityscapes. WildDash 2 additionally introduces support for new labels, such as: van, pickup, street light, billboard, road markings, and ego-vehicle.

5000+ traffic scenarios

city, highway & rural locations

from more than 100 countries

poor weather conditions

The main focus of this dataset is testing. It contains data recorded under real world driving situations. Aims of it are:

The WildDash dataset does not offer enough material to train algorithms by itself. We suggest you use a mixture of material from the Apollo Scape, Audi A2D2, Berkeley DeepDrive(BDD)/Nexar, Cityscapes, India Driving Dataset, KITTI , and Mapillary datasets for training and the WildDash data for validation and testing.